In the decade since founder and CEO Jensen Huang announced that powering artificial intelligence workloads in the enterprise would be a cornerstone of the company’s plans going forward, Nvidia has relentlessly been expanding its AI and machine learning capabilities with GPUs, software, libraries and integrated appliances like DGX-2.

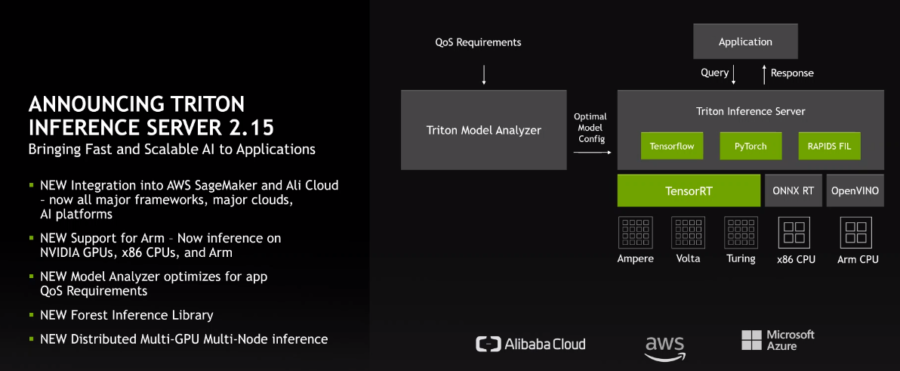

Earlier this year, the company launched its Enterprise AI software suite of tools and frameworks aimed at enabling enterprises to leverage AI at scale, particularly in on-premises data centers and private clouds through VMware’s vSphere cloud platform. A key part of Enterprise AI is Nvidia’s Triton Inference Server, a platform for inferencing on AI models and frameworks in the data center and the cloud and, increasingly, at the edge.

At Nvidia’s virtual GTC event this week, the company unveiled a range of new offerings and enhancements to its inference software – including Triton and Nvidia’s TensorRT software development kit (SDK) – to make it faster, more flexible and able to run more easily in highly distributed IT environments.

Also read: The Coming AI Threats We Aren’t Prepared For

Inference in AI

Inference is becoming increasingly important in AI environments, putting to use the vast amounts of training that AI models go through. If the training aspect of AI is like going to college, inference is what happens when the student graduates and leverages what they’ve learned in the working world.

As the amount of data increases and AI is used for such applications as fraud detection and autonomous driving, the need for systems to be able to take in and process the data and infer from it – particularly in as close to real time as possible – becomes increasingly important.

AI inferencing is difficult on multiple fronts, Huang said during his GTC keynote. There are high levels of computational intensity and the amount of data that comes with AI workloads is increasing.

“AI applications have different requirements – response time, batch throughput, continuous streaming,” the CEO said. “Different use cases use different models and deep learning architectures are complex. There are different frameworks. There are different machine learning platforms. There are different platforms with different operating environments, from cloud, to enterprise, to edge, to embedded. There are different confidentiality, security, functional safety and reliability requirements.”

Enterprises use a wide range of CPUs and GPUs, each with different capabilities and performance characteristics.

“The combination of all those requirements is gigantic,” Huang said. “Inferencing is arguably one of the most technically challenging runtime engines the world has ever seen.”

Also read: China May Lead the AI Race, But Expect Fierce Competition

Triton Gains Traction

More than 25,000 organizations are using Triton Inferencing Server, including such names as Microsoft, Samsung, Siemens Energy and Capital One. It can be run on both CPUs and GPUs located in data centers and private and public clouds – it’s integrated into Amazon Web Services (AWS), Microsoft Azure and Google Cloud – as well as at the edge.

It can inference for images, video, language, speech and models of all kinds and enables enterprises that are developing their own AI models to put them into products, Huang told journalists during a virtual news conference during the show.

The latest iteration of Triton Inference Server – version 2.15 – comes with a new Model Analyzer that automatically determines the best configuration for AI models, searching among the different computers and CPU and GPU options, dynamic batching requirements and concurrency paths to meet the workload demand.

Multi-GPU, Multi-Node Support

In addition, the software now supports both multiple-GPU and multiple-node computing, which is important as the workloads get larger and more complex and the IT environment becomes increasingly distributed.

“As these models are growing exponentially, particularly in new use cases, they’re often getting too big for you to run on a single CPU or even a single server,” Ian Buck, vice president and general manager of Nvidia’s Tesla data center business, said during a press briefing.

The need to run these workloads in real time is increasing, Buck said. “The new version of Triton actually supports distributed inference. We take the model and we split it across multiple GPUs and multiple servers to deliver that to optimize the computing to deliver the fastest possible performance of these incredibly large models.”

In addition, Triton now supports Arm’s chip architecture, adding it to a list that includes x86 chips from Intel and AMD and Nvidia’s GPUs, and can run inference jobs on deep learning (DL) as well as machine learning (ML) models, due to a new Forest Inferencing Library.

The new library includes what’s called the “random forest” machine learning process for such tasks as classification and regression and gradient-boosted decision tree model.

“Tree models are ubiquitous, especially in finance,” Huang said. “It is naturally explainable and new predictive features can be added-on without fear of regressions.”

Also read: Databricks’ $1 Billion Funding Round Puts Focus on Data Management, AI

Fraud Detection at Scale

Using a fraud detection dataset, Huang showed that with small tree models, both CPUs and GPUs can hit the necessary mark of 1.5 milliseconds for detecting fraud and blocking the transaction.

“However, with large trees needed for better detection rates, inference time remains under 1.5ms for a GPU, while a CPU now takes 3.5ms – way too slow to block the transaction,” Huang said. “With this release [of Triton], we open Nvidia GPUs to the world of classical ML inferencing. Now, with one inference platform, Triton lets you inference DL and ML, on GPUs and CPUs.”

Nvidia also is integrating Triton into AWS’ SageMaker fully managed machine learning platform.

In addition, the company is integrating TensorRT with the open TensorFlow and PyTorch AI frameworks. With this move, version 8.2 of TensorRT – which optimizes AI models and provides a runtime for high-performance inference on Nvidia’s GPUs – provides performance that is up to three times faster than in-framework and does so with a single line of code.

“Many developers inference directly from the frameworks,” Huang said. “It’s easy, it always works, but it is slow. Now, with one line of code, ML developers can get a 3x boost without lifting a finger. Just one line of code.”

At the same time, the latest version, with such optimization as accelerating deep-learning inference and providing high throughput and low latency, large language models with billions of parameters, can be run in real time, the company said.

Nvidia also introduced its A2 Tensor Core GPU, a low-power, entry-level accelerator for AI inference workloads at the edge that the company said will offer up to 20 times the performance than CPUs. The energy-efficient chip consumes 40 to 60 watts, and along with Nvidia’s A100 and A30 Tensor Core GPUs, gives the vendor a more complete AI inference portfolio.

Further reading: NVIDIA’s Huang Gets Overdue Recognition